Case Study

Designing for Trust Through Language

How language decisions shape user behavior, comprehension, and outcomes in high-stakes flows.

Principles for Designing Trust Through Language

- Trust is established before a system demonstrates capability.

- Language sets emotional safety before it gathers information.

- Cognitive load must be managed explicitly in high-stress moments.

- Users should feel guided, not interrogated.

- Complexity should be revealed only when it becomes relevant.

Language Patterns in Trust-Critical Systems

Orient the User Before Routing

Make it clear what kind of help the system can offer before asking the user to choose a path.

Progressive Disclosure Under Stress

Break complex requirements into steps so users are not asked to process everything at once.

Context Before Resolution

Collect the minimum context needed before attempting to resolve the issue or apply policy.

Language as a Pacing Tool

Use wording to control the speed of the interaction when users are overwhelmed or anxious.

Agency Without Abandonment

Offer choices while continuing to guide the user, rather than handing off complexity.

Patterns in Practice

The following examples show how the same trust principles and language patterns apply in different systems and constraints.

Each case begins from a different starting condition, but uses the same approach: orient the user, manage cognitive load, and gather context before acting.

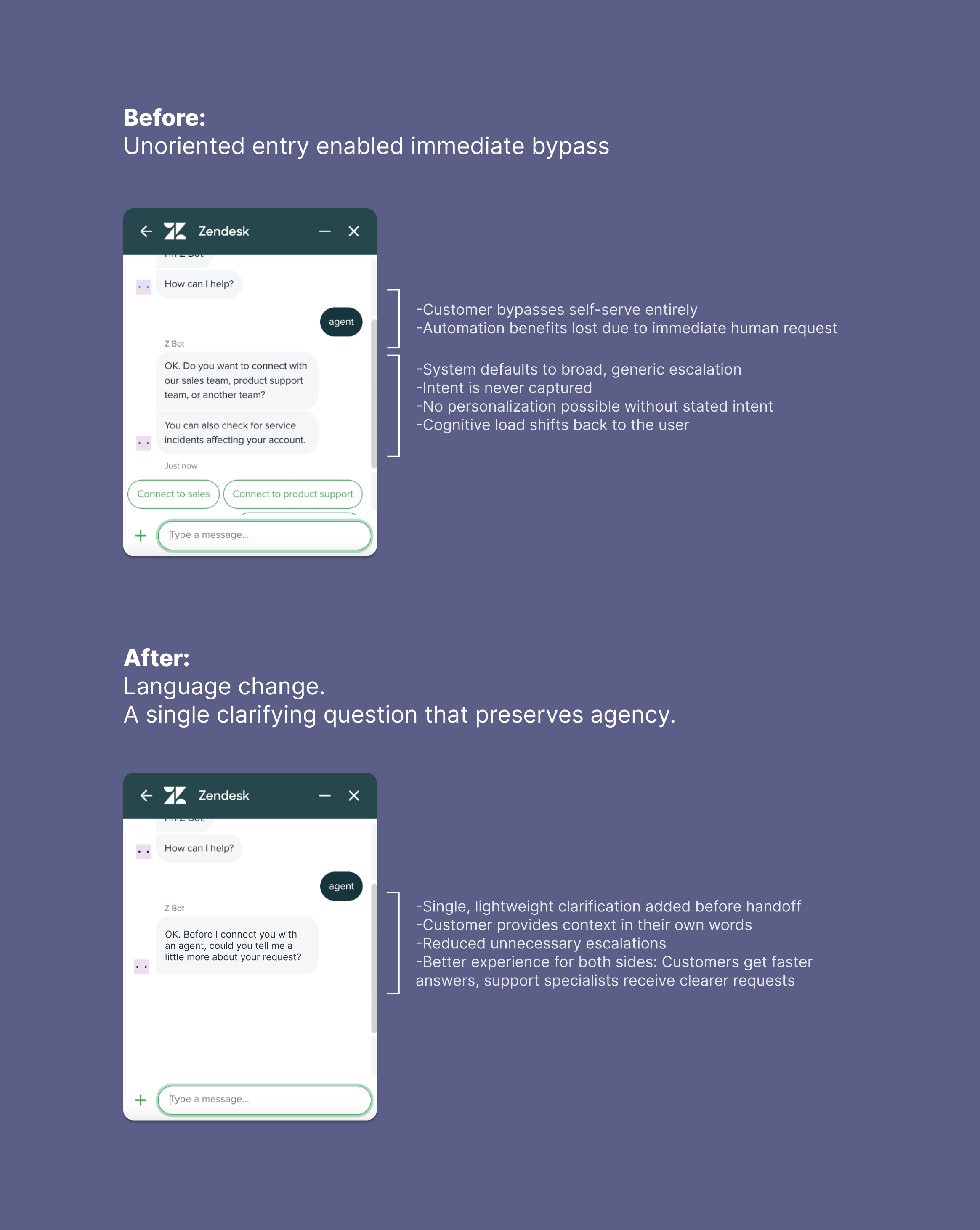

Case Example: Premature Human Bypass

Support system, Zendesk (abstracted)

At Entry

- Users entered chat with a learned habit of requesting a human immediately.

- Prior experiences reinforced the belief that self-serve would not help.

- The system had no opportunity to capture intent or offer relevant guidance.

- Escalation was available, but context was missing.

Language Risks

- Immediate escalation prevented the system from capturing intent.

- Self-serve options remained unused despite being available.

- Specialists received simple requests without context.

- The system reinforced avoidance behavior rather than engagement.

Applied Patterns

Orient the User Before Routing

The system established what kind of help was available before asking users to identify themselves.

Context Before Resolution

A brief prompt gathered intent before escalation.

Language as a Pacing Tool

Copy slowed the interaction just enough to reset behavior without blocking progress.

Intervention

A single clarifying message appeared when a user immediately requested a human. The message confirmed escalation was still available, reducing fear of being blocked. Its wording mirrored how an agent would naturally ask for context, creating a brief pause without stopping progress.
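As a rough illustration of the intervention described above, the logic can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the production implementation: the trigger phrases, the `Step` type, the `next_step` function, and the message copy are all hypothetical, since the case study does not quote the actual wording or rules.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical trigger phrases for "the user is asking for a human".
HUMAN_REQUEST = re.compile(
    r"\b(human|agent|representative|real person)\b", re.IGNORECASE
)

# Illustrative copy only. It confirms escalation is still available,
# then asks for context the way an agent naturally would.
CLARIFYING_MESSAGE = (
    "I can connect you with a specialist. "
    "So they can help right away, what do you need help with today?"
)


@dataclass
class Step:
    action: str                    # "clarify", "escalate", or "route"
    reply: Optional[str] = None    # text shown to the user, if any
    context: Optional[str] = None  # intent captured from the user, if any


def next_step(message: str, already_asked: bool) -> Step:
    """Decide what happens after one incoming chat message."""
    wants_human = bool(HUMAN_REQUEST.search(message))
    if wants_human and not already_asked:
        # Pause once: reassure, then ask for context.
        return Step("clarify", reply=CLARIFYING_MESSAGE)
    if wants_human:
        # The user asked again, so hand off without blocking progress.
        return Step("escalate")
    # The user shared intent; route (self-serve or handoff) with context.
    return Step("route", context=message)
```

The key design choice is that the clarifying message appears at most once. A second request for a human escalates immediately, so the pause never becomes a barrier.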

Why It Worked

By confirming a human option remained available, the message lowered anxiety at the moment of entry. The tone reduced resistance to engaging with the system, while the brief pause allowed intent to be captured before any handoff occurred.

Outcome

- More users shared context instead of escalating immediately.

- Deflection increased while unnecessary escalations decreased.

- Specialists received clearer requests when handoff occurred.

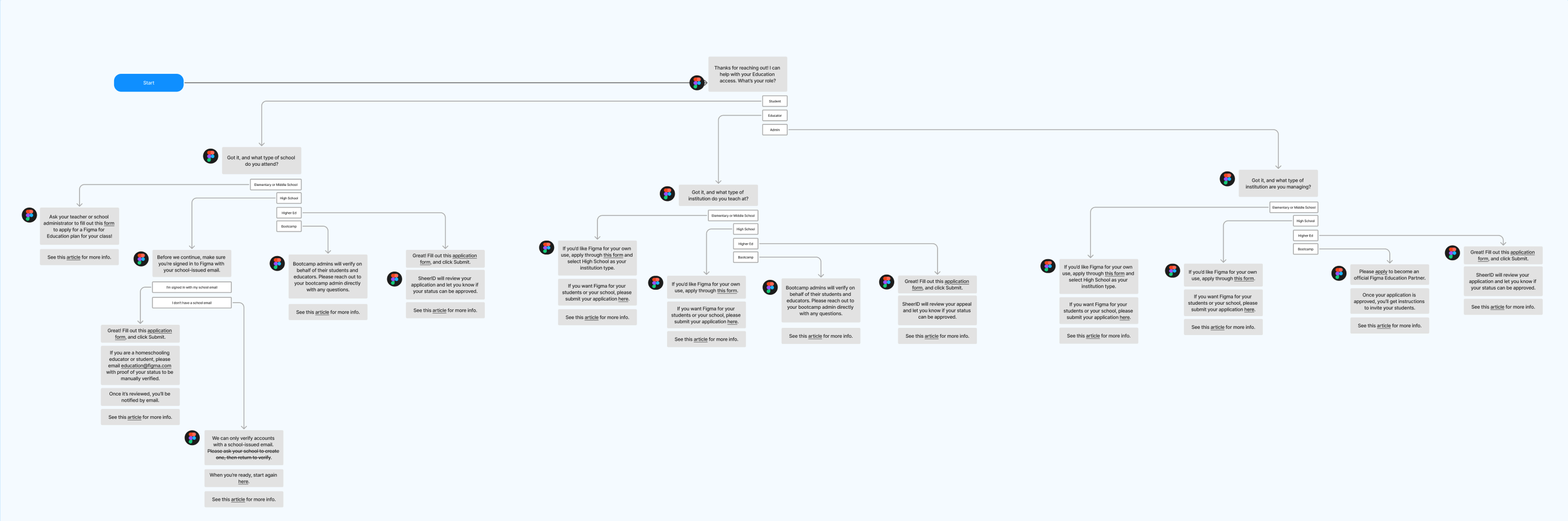

Case Example: Eligibility Verification Under Stress

Education access flow, Figma (abstracted)

At Entry

- Users arrived anxious about losing access to their files due to a required re-verification.

- The change affected a high volume of customers at once.

- The system did not know the user’s role or eligibility status on arrival.

- Multiple verification paths existed (K–12, higher education, bootcamp), but were not yet visible to the user.

Language Risks

- Presenting all requirements at once risked overwhelming already-stressed users.

- Asking role or eligibility questions too early could feel interrogative.

- Unclear sequencing risked users abandoning the flow or seeking support prematurely.

- Policy language risked sounding punitive rather than supportive.

Applied Patterns

Orient the User Before Routing

The system established what kind of help was available before asking users to identify themselves.

Progressive Disclosure Under Stress

Information and requirements were revealed step by step instead of all at once.

Context Before Resolution

The flow gathered school type and role before presenting eligibility details.

Language as a Pacing Tool

Wording guided users through the process without accelerating anxiety.

Agency Without Abandonment

Users made selections, but the system continued to lead the interaction.

Conversation Structure

The conversation was structured to acknowledge the need for re-verification without immediately listing every requirement. Instead of presenting the full process upfront, the flow guided users through a short sequence of clarifying steps. Users were first asked to identify their school type, followed by a role selection to establish eligibility context. Based on those inputs, the system delivered only the instructions relevant to that specific path, keeping the interaction focused and contained at each step.
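The sequence above can be sketched as a small function that returns only the next thing the user needs to see. This is an illustrative sketch, not the actual flow: the three school types come from the case study, but the role options, the prompt wording, and the `next_prompt` function are assumptions, and the final instructions are left as a placeholder rather than invented.

```python
from typing import Optional

# The three verification paths named in the case study.
SCHOOL_TYPES = ("K-12", "higher education", "bootcamp")
# Hypothetical roles; the case study does not list them.
ROLES = ("student", "educator")


def next_prompt(school_type: Optional[str] = None,
                role: Optional[str] = None) -> str:
    """Return the single next message, based on what is known so far."""
    if school_type is None:
        # Step 1: acknowledge re-verification, then ask one question.
        return (
            "We need to re-verify your education access. "
            "This takes a few short steps. "
            f"What type of school are you with? ({', '.join(SCHOOL_TYPES)})"
        )
    if school_type not in SCHOOL_TYPES:
        raise ValueError(f"unknown school type: {school_type!r}")
    if role is None:
        # Step 2: establish eligibility context.
        return f"Thanks. What is your role? ({', '.join(ROLES)})"
    if role not in ROLES:
        raise ValueError(f"unknown role: {role!r}")
    # Step 3: show only the instructions for this path (placeholder text).
    return f"[verification instructions for: {school_type}, {role}]"
```

Calling `next_prompt()` asks about school type, `next_prompt("bootcamp")` asks about role, and `next_prompt("bootcamp", "student")` returns only the bootcamp student path. Requirements for the other paths never appear.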

Why It Worked

Progressive disclosure reduced cognitive overload during a high-anxiety interaction. Clear sequencing and guided language helped users complete verification without interpreting eligibility rules themselves.

Outcome

- Users completed re-verification with fewer support requests.

- Confusion and anxiety were reduced despite the scale of the change.

- Support interactions, when they occurred, started with clearer context.

- The system handled high volume without relying on one-to-one assistance.

How This Scales

People carry habits and expectations from one system into the next, and those habits shape how they enter any interaction. When the first step fails to orient them, the rest of the flow struggles to recover.

The same language patterns apply across channels, including chat, email, guided flows, voice menus, and agent-assist tools. Wherever trust is thin, a brief orienting moment that gathers context and sets direction strengthens the interaction before complexity appears.

Strong systems pay attention to the moment where users decide what kind of interaction they are in. When that moment is clear and steady, routing becomes simpler, instructions become easier to follow, and users are more likely to stay engaged through completion.

